This post explores emergent complexity through a WebGPU simulation inspired by the "slime mold" simulation in Sebastian Lague's Coding Adventure series.

Introduction to WebGPU

WebGPU is a new API designed to supersede WebGL for graphics programming on the web. It has shipped in Chromium-based browsers since 2023, implemented with the C++ Dawn library. Firefox supports it as of this year, using the Rust wgpu library. If the simulations in this post don't render, take a look at the browser compatibility chart and try a different browser.

The rollout of this new web API has unlocked incredible potential for writing cross-platform graphics code, with more flexibility and higher performance than WebGL has been able to offer. Most exciting to me is the ability to write general-purpose GPU (GPGPU) code, in the form of compute shaders, that runs in the browser. That means I can embed the simulations directly in this blog post!

The Simulation

To start off, this is a simulation of 1000 entities, which I will call ants, each with a position and a velocity. They move around the image, and every pixel in the "pheromone" texture they step on is colored red. Each frame, four things happen in order: every pixel has its brightness reduced by a constant amount, each ant's position is updated according to its velocity, each ant's velocity direction is changed by a random amount, and finally the pixel at each ant's position is set to red. The pheromone texture is then copied to the screen by a render pass.
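To make the per-frame order concrete, here is a minimal CPU sketch in TypeScript. It is not the shader code behind the simulation, only a reference for the same four steps. The `Ant` interface, the `fade` and `maxTurn` parameters, and the wrap-around at the image edges are my own assumptions for illustration.

```typescript
// CPU reference for one frame; the post runs equivalent logic in GPU compute shaders.
interface Ant {
  x: number;
  y: number;
  vx: number;
  vy: number;
}

function stepFrame(
  pheromone: Float32Array, // one brightness value per pixel, row-major
  width: number,
  height: number,
  ants: Ant[],
  fade: number, // brightness removed from every pixel per frame (assumed parameter)
  maxTurn: number, // largest random heading change in radians (assumed parameter)
  random: () => number = Math.random,
): void {
  // 1. Dim every pixel by a constant amount, clamping at zero.
  for (let i = 0; i < pheromone.length; i++) {
    pheromone[i] = Math.max(0, pheromone[i] - fade);
  }

  for (const ant of ants) {
    // 2. Advance the position by the velocity. Wrapping at the edges is an assumption.
    ant.x = (((ant.x + ant.vx) % width) + width) % width;
    ant.y = (((ant.y + ant.vy) % height) + height) % height;

    // 3. Rotate the velocity by a random angle in [-maxTurn, maxTurn]; speed is unchanged.
    const angle = (random() * 2 - 1) * maxTurn;
    const c = Math.cos(angle);
    const s = Math.sin(angle);
    const vx = ant.vx * c - ant.vy * s;
    const vy = ant.vx * s + ant.vy * c;
    ant.vx = vx;
    ant.vy = vy;

    // 4. Set the pixel under the ant to full brightness.
    pheromone[Math.floor(ant.y) * width + Math.floor(ant.x)] = 1;
  }
}
```

Passing `random` as a parameter keeps the sketch deterministic when you want to check it by hand, for example with `() => 0.5`, which produces no turn at all.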

Under the hood, this uses two buffers. One represents the ants as 4D vectors, where xy are position and zw are velocity. The other is the pheromone texture buffer. There are three GPU passes:

- Compute pass attenuates the brightness of each pixel in the pheromone texture.

- Compute pass simulates ant behavior and adds pheromones to the texture.

- Render pass fills canvas with a quad in the vertex shader and samples the pheromone texture in the fragment shader.
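As a rough illustration of the ant buffer layout described above, the sketch below packs ants into a flat `Float32Array` with four floats per ant, which is 16 bytes each and matches the stride of an array of `vec4<f32>` in WGSL. The helper names `packAnts` and `readAnt` are hypothetical, not taken from the simulation's source.

```typescript
const FLOATS_PER_ANT = 4; // x, y (position) then vx, vy (velocity)

// Pack ants into one flat array: [x0, y0, vx0, vy0, x1, y1, vx1, vy1, ...].
function packAnts(
  ants: { x: number; y: number; vx: number; vy: number }[],
): Float32Array {
  const data = new Float32Array(ants.length * FLOATS_PER_ANT);
  ants.forEach((ant, i) => {
    data.set([ant.x, ant.y, ant.vx, ant.vy], i * FLOATS_PER_ANT);
  });
  return data;
}

// Read ant i back out of the packed array (hypothetical helper for debugging).
function readAnt(data: Float32Array, i: number) {
  const o = i * FLOATS_PER_ANT;
  return { x: data[o], y: data[o + 1], vx: data[o + 2], vy: data[o + 3] };
}
```

In a real WebGPU setup, `data.byteLength` would be the size of the storage buffer, and the array itself is what you would upload to it.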

To make it look more organic, we can make the pheromones diffuse, as if dispersing through air. We can accomplish this by blurring the texture every frame in the first compute pass. However, this introduces some additional complexity. Dimming could be done independently for each pixel: each thread in the compute shader read one pixel, subtracted a constant amount from it, then wrote the new value back to the same memory location. Blurring the image requires each pixel to read the values of its neighbors. If we overwrite a pixel with a blurred value, then the neighboring pixels can't know what the original color was. To remedy this, we can use a swap buffer: allocate two textures, read from one, write to the other, and swap which is which each frame. Here, I'm using a 3x3 Gaussian blur kernel and 1024 ants, with a performance metric in the bottom left.

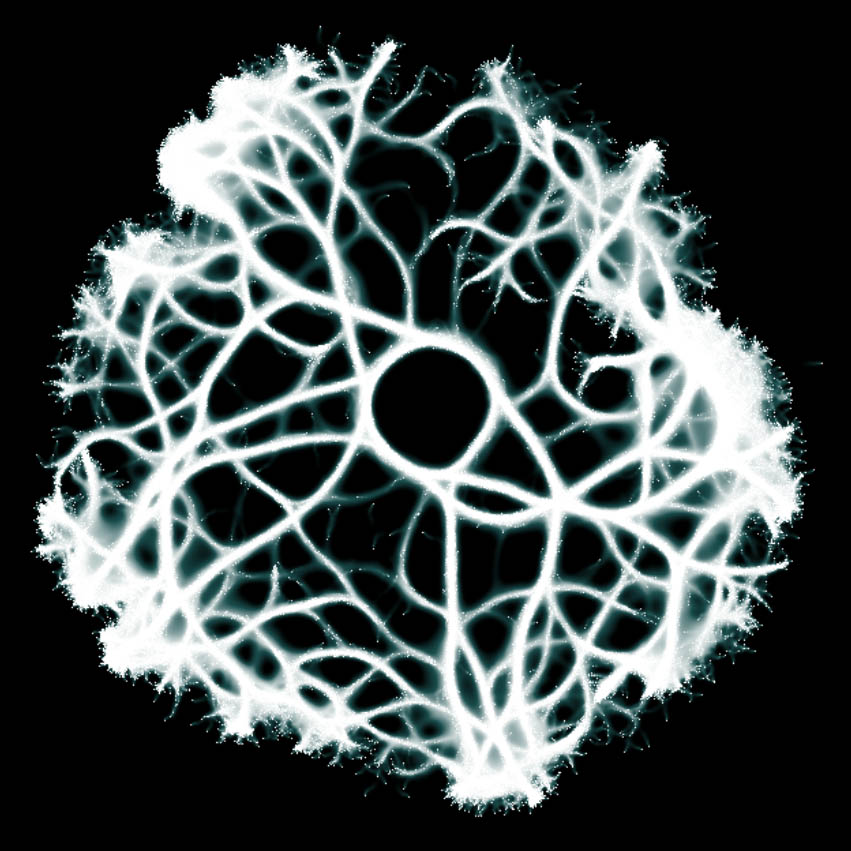
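The swap-buffer blur can be sketched on the CPU as follows. This is only an illustration under stated assumptions: I use the common 3x3 Gaussian weights (1, 2, 1 / 2, 4, 2 / 1, 2, 1, divided by 16) and clamp coordinates at the image borders, neither of which is confirmed to match the shader. The names `blurPass` and `SwapBuffer` are hypothetical.

```typescript
// 3x3 Gaussian kernel weights in row-major order; they sum to 16.
const KERNEL = [1, 2, 1, 2, 4, 2, 1, 2, 1];

// Read each pixel's neighborhood from `src` and write the blurred value to `dst`.
// `src` is never modified, so every pixel sees the original values of its neighbors.
function blurPass(src: Float32Array, dst: Float32Array, width: number, height: number): void {
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let sum = 0;
      for (let ky = -1; ky <= 1; ky++) {
        for (let kx = -1; kx <= 1; kx++) {
          // Clamp coordinates at the borders (an assumed edge policy).
          const sx = Math.min(width - 1, Math.max(0, x + kx));
          const sy = Math.min(height - 1, Math.max(0, y + ky));
          sum += KERNEL[(ky + 1) * 3 + (kx + 1)] * src[sy * width + sx];
        }
      }
      dst[y * width + x] = sum / 16;
    }
  }
}

// A pair of textures: blur from `read` into `write`, then swap their roles.
class SwapBuffer {
  read: Float32Array;
  write: Float32Array;
  width: number;
  height: number;

  constructor(width: number, height: number) {
    this.width = width;
    this.height = height;
    this.read = new Float32Array(width * height);
    this.write = new Float32Array(width * height);
  }

  blurAndSwap(): void {
    blurPass(this.read, this.write, this.width, this.height);
    // After the swap, `read` holds the freshly blurred frame.
    [this.read, this.write] = [this.write, this.read];
  }
}
```

Swapping references instead of copying pixels is the point of the design: on the GPU the equivalent is alternating which texture is bound for reading and which for writing each frame.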

It's already looking more organic, but so far each ant is acting completely independently. For emergent behaviors to, well, emerge, the ants must influence each other's behavior. A simple behavior we can implement is steering towards pheromones. If we "sniff" pixels from the pheromone texture in front of each ant, we can steer it towards the direction with the most pheromones. I implemented this as sampling three lines, each 30 degrees apart, and steering towards whichever has the highest sum of pixels. This simulation is running 20,000 ants, each sniffing lines 10 pixels long.
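Here is a hedged CPU sketch of the sniffing step. It sums the pheromone values along three lines (straight ahead and 30 degrees to either side) and returns the heading of the strongest one. Several details are assumptions on my part: the ant snaps directly to the winning direction, ties keep the current heading, samples outside the texture count as zero, and the heading is an angle such as `Math.atan2(vy, vx)` rather than the velocity vector stored in the buffer. The function names are hypothetical.

```typescript
// Sum pheromone along a line of `length` pixels starting one pixel away from (x, y).
function sniff(
  pheromone: Float32Array,
  width: number,
  height: number,
  x: number,
  y: number,
  angle: number,
  length: number,
): number {
  let sum = 0;
  for (let step = 1; step <= length; step++) {
    const px = Math.floor(x + Math.cos(angle) * step);
    const py = Math.floor(y + Math.sin(angle) * step);
    // Samples outside the texture count as empty (an assumed edge policy).
    if (px >= 0 && px < width && py >= 0 && py < height) {
      sum += pheromone[py * width + px];
    }
  }
  return sum;
}

// Return the new heading in radians: the sniffed direction with the highest sum.
function steer(
  pheromone: Float32Array,
  width: number,
  height: number,
  x: number,
  y: number,
  heading: number,
  length: number = 10, // sniff distance in pixels
  spread: number = Math.PI / 6, // 30 degrees between adjacent lines
): number {
  const minus = sniff(pheromone, width, height, x, y, heading - spread, length);
  const ahead = sniff(pheromone, width, height, x, y, heading, length);
  const plus = sniff(pheromone, width, height, x, y, heading + spread, length);

  // Keep going straight when straight ahead is at least as strong as both sides.
  if (ahead >= minus && ahead >= plus) return heading;
  // Otherwise turn to the stronger side; an exact side tie picks heading + spread.
  return minus > plus ? heading - spread : heading + spread;
}
```

Each ant reads up to `3 * length` pixels per frame, which is why the sniff distance has such a direct effect on performance at high ant counts.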

I have run this with as many as 8 million ants with reasonable performance using low sample distances, but I'm not trying to stress test your device. There is another instance of this simulation on this page with sliders to control the parameters.